I've often seen questions from new BizTalk developers asking why they couldn't subscribe to an incoming message. Typically, the real question is why the message's property values were not promoted as expected. And sometimes, for the new developer, the answer is that he or she forgot to use the XML Receive pipeline and instead used the Pass Thru Receive pipeline for the message being received.

So, what we take away from this scenario is that one job of a receive pipeline component (XML, Flat File, etc.) is to promote property values from the message into the message context, which makes those values available to subscriptions. And notice that the Pass Thru Receive pipeline is named appropriately: it passes a message straight through to the message box without any processing. Since the message is never identified, no properties from the message are promoted, even if it is an instance of a deployed schema.
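To make "identified" a little more concrete: the message type BizTalk associates with an XML message is conventionally the root element's namespace and name joined by `#`. Below is a minimal sketch of that convention using only System.Xml. The class and method names are my own, purely for illustration, and nothing here touches the message context.

```csharp
using System.Xml;

// Hypothetical helper, not part of BizTalk: derives the
// "namespace#rootName" string for an XML document.
public static class MessageTypeSketch
{
    public static string FromXml(string xml)
    {
        var doc = new XmlDocument();
        doc.LoadXml(xml);

        XmlElement root = doc.DocumentElement;

        // A root element without a namespace yields just the root name.
        return string.IsNullOrEmpty(root.NamespaceURI)
            ? root.LocalName
            : root.NamespaceURI + "#" + root.LocalName;
    }
}
```

For a root element `Order` in the made-up namespace `http://example.org/orders`, this gives `http://example.org/orders#Order`.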

Custom Pipeline Property Promotion

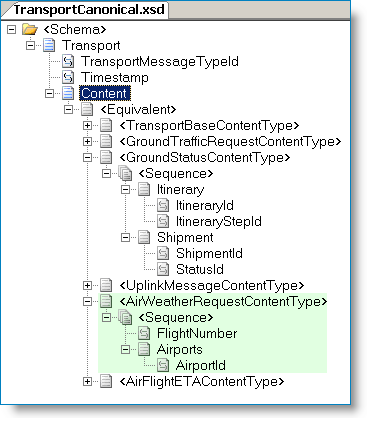

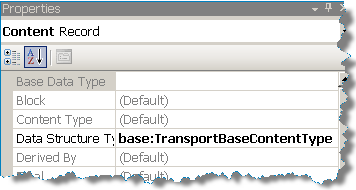

Recently I was developing a custom pipeline component when I discovered that the message properties were not being promoted. Well, it was a little more complicated than that because this particular component encapsulated the Flat File Receive disassembly pipeline component. This part worked, because in this case the Flat File Receive component handled property promotion of the resulting Xml message.

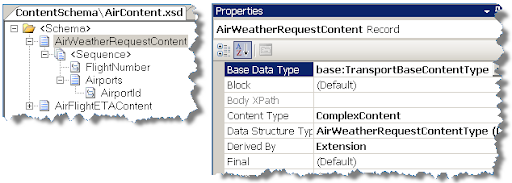

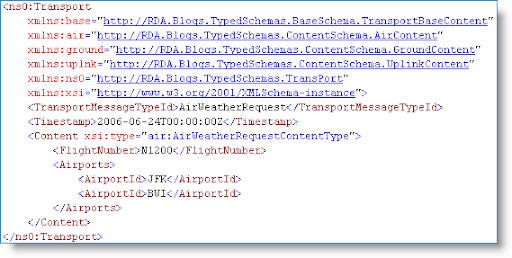

The other piece of the puzzle was that I also created a second Xml message on the fly to be emitted from the pipeline. It was this second message whose properties were not being promoted at all. To add another layer of complexity, this additional message could be one of a handful of different types. I wanted to promote its properties dynamically, since a custom pipeline that doesn't have to be adjusted when schemas change in the future makes for a more robust solution.

Drill Down into Property Promotion

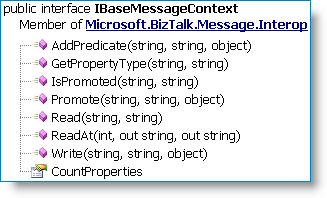

I realized that it is up to my custom pipeline component to promote the properties for that second message, since it is being created on the fly. If you've worked with custom pipeline component code before, you might think: why not just use the IBaseMessageContext methods to promote properties? Well, that's the mechanism that actually effects the promotion, but it is only part of the answer, because those methods don't let you discover which fields are linked to the property schema(s). Remember that when you create promoted properties for your message, you are linking an XPath from your message to a property name (element) in a property schema. So not only do we need to discover which promoted (and distinguished) properties are available for this second message, we also need to know the XPath in order to actually promote the value from the message to the message context!

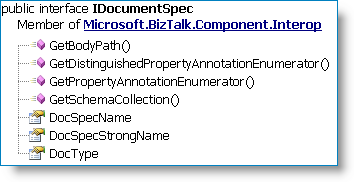

This is where the IDocumentSpec interface comes in. This interface contains the GetPropertyAnnotationEnumerator and GetDistinguishedPropertyAnnotationEnumerator methods, which give us the promoted and the distinguished property names, respectively. From these lists we can also determine the XPath to each of those values in our message.
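Stripped of the BizTalk types, the idea looks roughly like the sketch below. `PropertyLink` and `PromotionSketch` are hypothetical stand-ins of my own: in a real component the name, namespace and XPath would come from the IDocumentSpec enumerators, and the values would go to the message context rather than into a dictionary. The sample document and namespaces are made up, and the XPaths are written in the `local-name()`/`namespace-uri()` style that BizTalk schemas typically use.

```csharp
using System.Collections.Generic;
using System.Xml;

// Hypothetical stand-in for one property annotation:
// a property name and namespace linked to an XPath in the message.
public sealed class PropertyLink
{
    public string Name { get; }
    public string Namespace { get; }
    public string XPath { get; }

    public PropertyLink(string name, string ns, string xpath)
    {
        Name = name;
        Namespace = ns;
        XPath = xpath;
    }
}

public static class PromotionSketch
{
    // For each link, evaluate its XPath against the document and, when a
    // node is found, record the value under "namespace#name".
    // The dictionary stands in for the message context.
    public static Dictionary<string, string> Extract(
        XmlDocument doc, IEnumerable<PropertyLink> links)
    {
        var values = new Dictionary<string, string>();
        foreach (PropertyLink link in links)
        {
            XmlNode node = doc.SelectSingleNode(link.XPath);
            if (node != null)
            {
                values[link.Namespace + "#" + link.Name] = node.InnerText;
            }
        }
        return values;
    }

    // Small demo with a made-up audit trail document.
    public static Dictionary<string, string> Demo()
    {
        var doc = new XmlDocument();
        doc.LoadXml(
            "<ns0:AuditTrail xmlns:ns0='http://example.org/audit'>" +
            "<FileName>orders.txt</FileName>" +
            "</ns0:AuditTrail>");

        var links = new[]
        {
            new PropertyLink("FileName", "http://example.org/props",
                "/*[local-name()='AuditTrail' and namespace-uri()='http://example.org/audit']" +
                "/*[local-name()='FileName' and namespace-uri()='']"),
            // No such element in the document, so this link is skipped.
            new PropertyLink("BatchId", "http://example.org/props",
                "/*[local-name()='AuditTrail']/*[local-name()='BatchId']")
        };

        return Extract(doc, links);
    }
}
```

Because the XPaths match on `local-name()` and `namespace-uri()`, no namespace manager is needed when calling `SelectSingleNode`. A link whose XPath finds nothing is simply skipped, which mirrors the null check in the component code.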

Property Promotion Implementation

Code Snippet

    // Instead of hard-coding namespaces for various built-in
    // BTS properties, here is an idea that caches the information
    // the first time the pipeline component runs.
    //
    private struct BTSProperties
    {
        public static BTS.InterchangeID interchangeId = new BTS.InterchangeID();
        public static XMLNORM.DocumentSpecName documentSpecName = new XMLNORM.DocumentSpecName();
        public static FILE.ReceivedFileName receivedFileName = new FILE.ReceivedFileName();
        public static BTS.MessageType messageType = new BTS.MessageType();
        public static BTS.SchemaStrongName schemaStrongName = new BTS.SchemaStrongName();
    }

[ ... ]

    // msgAuditTrail
    //     Second message created on the fly,
    //     an instance of IBaseMessage.
    //
    // xmlMsgAuditTrail
    //     Xml document instance.
    //
    // pContext
    //     IPipelineContext - provided to us during
    //     pipeline execution.
    //
    // auditTrailAssemblyQualifiedName
    //     String value for the schema type.
    IDocumentSpec docSpecAuditTrail =
        pContext.GetDocumentSpecByName(auditTrailAssemblyQualifiedName);

    // Write document type to the message context
    //
    msgAuditTrail.Context.Write(BTSProperties.documentSpecName.Name.Name,
        BTSProperties.documentSpecName.Name.Namespace,
        docSpecAuditTrail.DocSpecStrongName);

    msgAuditTrail.Context.Write(BTSProperties.schemaStrongName.Name.Name,
        BTSProperties.schemaStrongName.Name.Namespace,
        docSpecAuditTrail.DocSpecStrongName);

    // WRITE DISTINGUISHED VALUES
    //
    // Iterate through each distinguished field and write its value from the
    // message to the context.
    // Write distinguished properties prior to promoted properties. This is because
    // if a promoted property is also distinguished it won't be promoted if the
    // "write" is used last.
    //
    IEnumerator annotations =
        docSpecAuditTrail.GetDistinguishedPropertyAnnotationEnumerator();

    if (annotations != null)
    {
        while (annotations.MoveNext())
        {
            DictionaryEntry de = (DictionaryEntry)annotations.Current;
            XsdDistinguishedFieldDefinition distinguishedField =
                (XsdDistinguishedFieldDefinition)de.Value;

            // Use the distinguished field's XPath to get the data to
            // write from the message
            //
            System.Xml.XmlNode contextNode =
                xmlMsgAuditTrail.SelectSingleNode(distinguishedField.XPath);

            if (contextNode != null)
            {
                // "Write" distinguished fields.
                //
                msgAuditTrail.Context.Write(de.Key.ToString(),
                    Microsoft.XLANGs.BaseTypes.Globals.DistinguishedFieldsNamespace,
                    contextNode.InnerText);
            }
        }
    }

    // WRITE PROMOTED VALUES
    //
    // Iterate through each promoted property and promote it from the message.
    // This runs after the distinguished fields have been written (see above),
    // so a property that is both distinguished and promoted ends up promoted.
    //
    annotations = docSpecAuditTrail.GetPropertyAnnotationEnumerator();

    if (annotations != null)
    {
        while (annotations.MoveNext())
        {
            IPropertyAnnotation propAnnotation =
                (IPropertyAnnotation)annotations.Current;

            // Use the annotation's XPath to get the data to
            // promote from message
            //
            System.Xml.XmlNode contextNode =
                xmlMsgAuditTrail.SelectSingleNode(propAnnotation.XPath);

            if (contextNode != null)
            {
                // "Promote" promoted properties.
                //
                msgAuditTrail.Context.Promote(propAnnotation.Name,
                    propAnnotation.Namespace,
                    contextNode.InnerText);
            }
        }
    }

External Links from MSDN

In addition to the BizTalk references needed to compile a custom pipeline component, you will need an additional reference to Microsoft.XLANGs.RuntimeTypes. Creating a custom pipeline component is outside the scope of this post, though I've included some external links below. In our example we retrieve the DocumentSpec object mentioned above from the pipeline context.

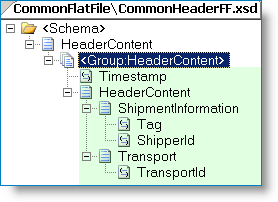

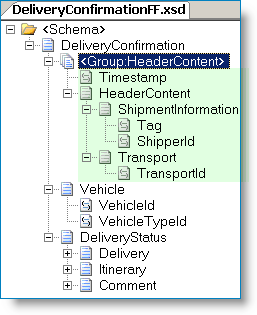

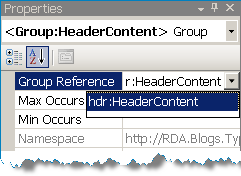

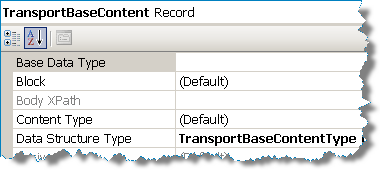

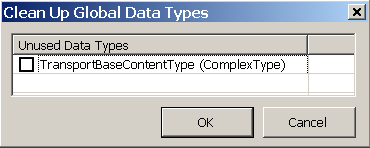

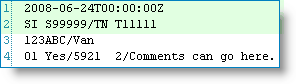

In our example BizTalk application we process many different kinds of flat file messages and the first two rows of all the messages contain the same format. So, we would like to create a reusable global definition.
